Stick a fork in Redis as a cache?

Redis, a widely used caching tool, changed its licensing, prompting the community to explore alternatives like Valkey, Redict, and Aerospike. Explore the impact on cloud services and the open-source software community.

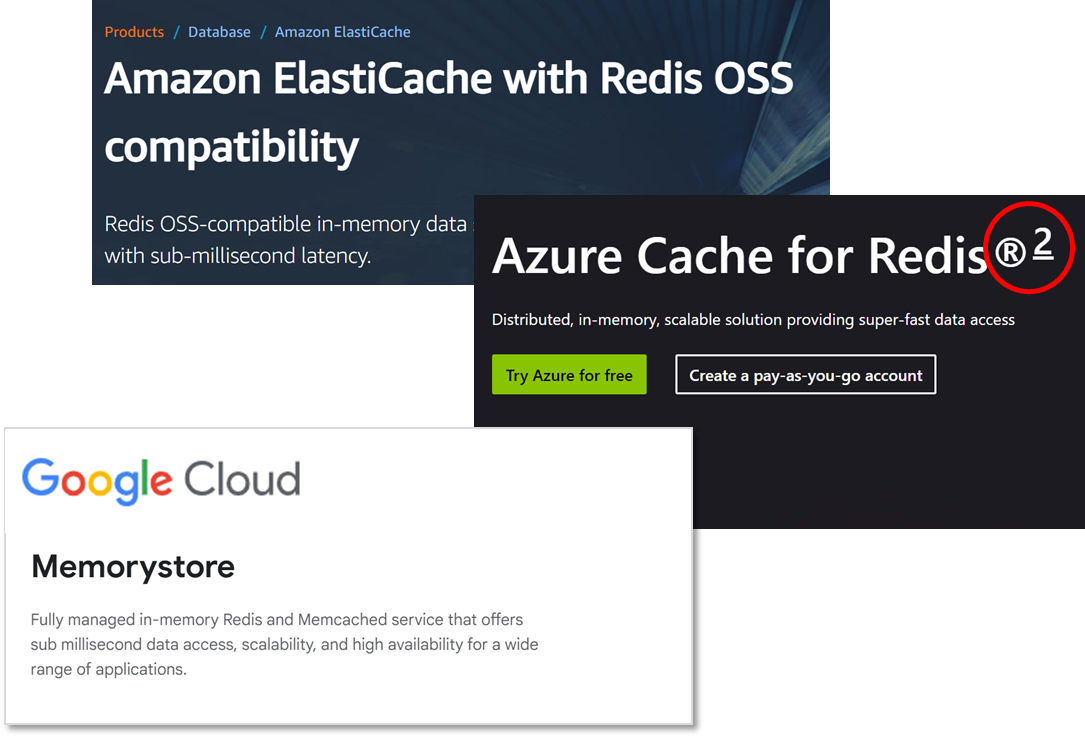

Redis has long been widely used as a cache and has become part of the services offered by the hyperscale cloud players: Azure with Azure Cache for Redis, Google Cloud with Memorystore, and AWS with Amazon ElastiCache for Redis. Each of these offerings is built primarily on the open-source distribution of Redis, as opposed to the commercial distribution from Redis Ltd.

Then, on March 20, 2024, Redis Ltd. announced a change to its licensing, ostensibly to protect its commercial interests in marketing and selling distributions of Redis and associated modules, most notably as an in-memory cache.

“Starting with Redis 7.4, Redis will be dual-licensed under the Redis Source Available License (RSALv2) and Server Side Public License (SSPLv1).”

The announcement is in part directed at cloud vendors that sell caching services based on “open source Redis” while presumably paying Redis Ltd. little, if anything, for such usage.

“Under the new license, cloud service providers hosting Redis offerings will no longer be permitted to use the source code of Redis free of charge. For example, cloud service providers will be able to deliver Redis 7.4 only after agreeing to licensing terms with Redis, the maintainers of the Redis code.”

The cloud vendors reacted by quickly adjusting their current Redis-based caching offerings to the new reality:

Amazon ElastiCache with Redis OSS compatibility was, until recently, called Amazon ElastiCache for Redis. Its clarification leans heavily on open-source messaging: “Built on Redis OSS and compatible with the Redis OSS APIs, ElastiCache works with your Redis OSS clients and uses the Redis OSS data format to store your data.”

Azure Cache for Redis has a prominent footnote stating that the use of the Redis name “is for referential purposes only and does not indicate any sponsorship, endorsement or affiliation between Redis and Microsoft.”

Memorystore on Google Cloud, until recently called Memorystore for Redis, states, “Redis is a trademark of Redis Ltd. Memorystore is based on open-source Redis versions 7.2 and earlier.”
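Part of what makes this pivot cheap for the cloud vendors is that compatibility lives at the wire protocol. Redis OSS clients talk to a server using RESP, the Redis serialization protocol, and any server that accepts the same framing (Valkey, Redict, or a managed service) works with those clients unchanged. The minimal Python sketch below shows how a client frames a request as a RESP array of bulk strings; the function name `encode_resp_command` is hypothetical and not part of any client library.

```python
def encode_resp_command(*args: str) -> bytes:
    """Frame a command as a RESP array of bulk strings (hypothetical helper).

    This is the request format Redis OSS clients send; forks such as
    Valkey and Redict accept the same framing.
    """
    # Array header: *<number of elements>\r\n
    parts = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode("utf-8")
        # Bulk string: $<length in bytes, not characters>\r\n<bytes>\r\n
        parts.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(parts)


print(encode_resp_command("SET", "greeting", "hello"))
```

Real clients also parse the server's replies, pipeline requests, and can negotiate RESP3 with the `HELLO` command; this sketch covers only the request side.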

And then there were three-ish

Beyond the cloud vendors, it is safe to say that the reaction from the FOSS (free and open-source software) community was not positive. “Let the forking ensue,” stated one commentator on Hacker News. Or, as only The Register can put it, “Redis tightens its license terms, pleasing basically no one.”

The result is that at least three alternatives, two of them direct Redis forks, are now attacking the same problem of in-memory caching.

Valkey

Backed by the Linux Foundation and billed as “A Redis Fork With a Future,” Valkey is perhaps the most direct fork of Redis for in-memory caching. The project got off the ground quickly, with numerous contributors from the Redis community whose day jobs are at Google, AWS, Oracle, and elsewhere.

In the AWS blog post “Why AWS Supports Valkey,” Kyle Davis, a developer advocate at AWS and Senior Developer Advocate on the Valkey project, put his finger on the sore spot:

“Redis broke with the community that helped it grow and left them stranded. This community is now unbound and will continue to use and contribute to the project as they have always done, and with more freedom.”

Therein lies the challenge of navigating the competing requirements of FOSS enthusiasts and commercial software vendors like Redis Ltd. I’m sure that at some board strategy meeting, Redis Ltd. made a calculation based on revenue targets, margins, and valuation. They must have anticipated some backlash from developers and contributors in the Redis OSS community.

Redis 7.4 and beyond

Redis Ltd. will, of course, continue enhancing and releasing Redis products with its new licensing scheme. An open question is whether Redis Ltd. can innovate fast enough to keep ahead of other OSS variants and whether there is a significant developer/committer community that will continue to participate in the Redis Ltd. project.

There is no denying that Redis’ relicensing has caused significant ill will within the broader Redis OSS community. This makes the oft-repeated refrain that “Redis is the most loved database in the world” a bit more complicated. We can all agree that database love is fickle, so it’s hard not to conclude that Redis Ltd. shot itself in the foot with this relicensing. Time will tell.

Redict: Is Redis finished as a cache?

Redict is an independent, copyleft fork of Redis introduced in March 2024, almost immediately after the Redis announcement. Andrew Kelley offers another perspective on the subject: as a cache, Redict is the finished article.

“Redict has already reached its peak; it does not need any more serious software development to occur. It does not need to pivot to AI. It can be maintained for decades to come with minimal effort. It can continue to provide a high amount of value for a low amount of labor. That's the entire point of software!”

Can IT organizations get by with forks of Redis 7.2.x such as Valkey or Redict? The Valkey team has pledged to introduce new features to its project, but considering how quickly Valkey got off the ground, it seems clear that the hyperscalers’ first priority was protecting their Redis-based caching solutions.

So, is Redis feature-complete as a cache? If Redict/Valkey really doesn’t “need any more serious software development,” does the discussion end here?

Is it time to rethink caching?

Caches are, almost by definition, a tactical solution. Application architects never start their designs by choosing a cache and going from there. They build their data pipelines, choose their data sources, craft their algorithms, and then identify performance bottlenecks. In an ideal world, caches are not necessary. On top of that, caching doesn’t work the way you think it does, as my colleague Behrad Babaee has written. He argues that a single user-facing operation typically requires multiple database lookups; the more lookups a request needs, the more likely it is that at least one of them misses the cache, and the request then waits on the database anyway, defeating the purpose of the cache.
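The multiple-lookup argument can be put in numbers under a deliberately simple model, which is an assumption of this sketch rather than Behrad's own analysis: if each lookup hits the cache independently with probability h, a request that needs n lookups is served entirely from cache with probability h^n.

```python
def full_hit_probability(hit_ratio: float, lookups: int) -> float:
    """Probability that all `lookups` reads hit the cache, assuming each
    read hits independently with probability `hit_ratio` (a simplification)."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    if lookups < 0:
        raise ValueError("lookups must be non-negative")
    return hit_ratio ** lookups


for n in (1, 5, 10, 20):
    p = full_hit_probability(0.9, n)
    print(f"{n:>2} lookups at a 90% hit ratio: {p:.1%} of requests avoid the database")
```

At a 90% per-lookup hit ratio, a request that needs 10 lookups avoids the database only about 35% of the time. Real workloads have correlated hits (hot keys tend to be read together), which this model ignores.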

In his article in The New Stack, Behrad takes it a step further, asking, “Is caching still necessary?” He covers both sides of the argument, saying that there are cases where caching makes sense, but in many cases, it doesn’t. “Introducing a caching layer in front of a database, whether external or internal, has limited effectiveness in improving the performance of an application suffering from slow data access.”
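The limited-effectiveness point follows from the usual expected-latency arithmetic for a cache-aside read, where every read checks the cache first and a miss additionally goes to the database: E[t] = t_cache + (1 − h) · t_db. The sketch below evaluates that formula with made-up latencies (0.5 ms for the cache, 5 ms for the database) chosen only for illustration; they are not measurements of Redis, Valkey, or any other product, and the cost of filling the cache after a miss is ignored.

```python
def expected_read_latency_ms(hit_ratio: float, cache_ms: float, db_ms: float) -> float:
    """Mean latency of a cache-aside read: every read pays the cache lookup,
    and misses additionally pay the database read (cache-fill cost ignored)."""
    return cache_ms + (1.0 - hit_ratio) * db_ms


# Illustrative numbers only, not measurements of any product.
CACHE_MS, DB_MS = 0.5, 5.0
for h in (0.5, 0.8, 0.95):
    t = expected_read_latency_ms(h, CACHE_MS, DB_MS)
    print(f"hit ratio {h:.0%}: mean {t:.2f} ms, every miss {CACHE_MS + DB_MS:.2f} ms")
```

Two things stand out: each miss costs slightly more than going straight to the database, and the mean approaches cache speed only at high hit ratios. With a slow database and a modest hit ratio, the miss term dominates.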

For your consideration: Aerospike

For the times it does make sense, how do you take a tactical solution and make it strategic? One way is to consolidate cache instances into a real-time database like Aerospike, which can function as a smarter cache while using far less infrastructure and without requiring all data to reside in memory. Aerospike’s Hybrid Memory Architecture relies on highly parallelized flash I/O to deliver real-time responses on less expensive infrastructure. With the addition of precision LRU cache eviction in Aerospike 7.1, anyone searching for a strategic caching platform should take a look.
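For readers less familiar with the eviction policy itself, here is a generic least-recently-used (LRU) cache in Python. It is a textbook sketch built on `collections.OrderedDict`, not Aerospike's implementation; how Aerospike 7.1 tracks recency is not modeled here, and the class name `LRUCache` is illustrative.

```python
from collections import OrderedDict
from collections.abc import Hashable
from typing import Generic, Optional, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


class LRUCache(Generic[K, V]):
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        # Insertion order doubles as recency order: oldest first, newest last.
        self._entries: "OrderedDict[K, V]" = OrderedDict()

    def get(self, key: K) -> Optional[V]:
        """Return the cached value, or None on a miss."""
        if key not in self._entries:
            return None
        # A read counts as a use: move the key to the most-recent end.
        self._entries.move_to_end(key)
        return self._entries[key]

    def put(self, key: K, value: V) -> None:
        """Insert or update a key, evicting the LRU entry if over capacity."""
        if key in self._entries:
            self._entries.move_to_end(key)
        self._entries[key] = value
        if len(self._entries) > self.capacity:
            # The first item is the least recently used one.
            self._entries.popitem(last=False)

    def __contains__(self, key: object) -> bool:
        return key in self._entries


cache: LRUCache[str, int] = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")        # "a" becomes the most recently used key
cache.put("c", 3)     # over capacity: evicts "b", the least recently used
print("b" in cache)   # False
```

Because `get` returns `None` on a miss, this sketch cannot distinguish a missing key from a stored `None`; a production cache would use a sentinel or raise instead.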