What is a transaction processing system?

Explore the vital role of transaction processing systems in managing business transactions. Understand real-time vs. batch processing and OLTP vs. OLAP systems.

Transaction processing systems handle the collection, storage, and processing of business transactions. These systems ensure the integrity and efficiency of routine financial transactions by managing data entry, updating, and retrieval while maintaining important records. A transaction processing system is designed to manage transaction records that emerge from various business activities, such as sales, purchases, and payments.

At their core, these systems are built to process transactions reliably and correctly. Each transaction is a sequence of operations that completes entirely or not at all, which keeps the data accurate and consistent. A transaction processing system must also handle large volumes of transaction data, often for businesses that need that data processed in real time.

The architecture of a transaction processing system often involves a combination of hardware and software that work together to automate and streamline business transactions. These systems are important in sectors such as finance, retail, and logistics, where they process customer orders, manage inventory, and handle billing and payments. By processing data efficiently, transaction processing systems help businesses run more smoothly and produce the data they need to make better decisions.

In summary, a transaction processing system is an integral component of today’s businesses. It handles financial transactions and helps organizations maintain accurate and up-to-date records. These systems help process transactions swiftly and securely, supporting business operations across industries.

OLTP vs. OLAP

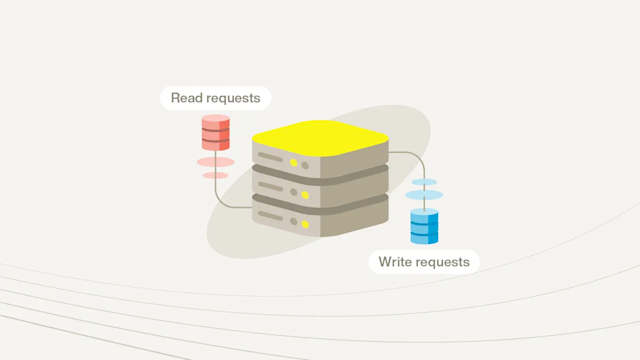

Online transaction processing (OLTP) systems are designed to support transaction-oriented applications. These systems handle many short online transactions, such as inserts, updates, and deletes. The main goal of OLTP systems is to process queries quickly and correctly, making them useful in environments where data changes frequently. Common applications include retail sales, banking, and order entry. OLTP systems often normalize data, splitting it across multiple tables to minimize redundancy, so each transaction is processed efficiently and accurately. Conversely, some NoSQL databases denormalize data to improve speed.

Online analytical processing (OLAP), in contrast, focuses on retrieving data for analysis and reporting. OLAP systems are optimized for read-heavy operations that require complex queries to extract insights from large amounts of historical data.

Unlike OLTP, OLAP often denormalizes the information in a database, sometimes to the point of keeping all the data in “one big table” (OBT), even though data might be duplicated. The duplication is a side effect of denormalizing to reduce joins across tables, a design that prioritizes faster query performance over storage efficiency. This is particularly beneficial for business intelligence operations that perform multidimensional analysis and generate reports. Multidimensional analysis is possible with the help of structures such as an OLAP cube, which organizes data so it can be explored and visualized across different dimensions.
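The trade-off between a normalized layout and "one big table" can be sketched with Python's built-in `sqlite3` module. The table names (`customers`, `orders`, `orders_obt`) and the sample rows are hypothetical, chosen only for illustration. Both queries return the same totals, but the second avoids the join at the cost of repeating the region on every order row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Normalized (OLTP-style): each fact is stored once.
CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT NOT NULL);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    amount INTEGER NOT NULL
);
INSERT INTO customers VALUES (1, 'EU'), (2, 'US');
INSERT INTO orders VALUES (10, 1, 50), (11, 1, 25), (12, 2, 40);

-- Denormalized (OLAP-style): region is duplicated onto every order row.
CREATE TABLE orders_obt AS
    SELECT o.id AS order_id, o.amount, c.id AS customer_id, c.region
    FROM orders o JOIN customers c ON c.id = o.customer_id;
""")

# Analytical query against the normalized tables needs a join.
joined = conn.execute(
    "SELECT c.region, SUM(o.amount) FROM orders o "
    "JOIN customers c ON c.id = o.customer_id "
    "GROUP BY c.region ORDER BY c.region"
).fetchall()

# The same question against the one big table needs no join.
flat = conn.execute(
    "SELECT region, SUM(amount) FROM orders_obt "
    "GROUP BY region ORDER BY region"
).fetchall()
```

On three rows the difference is invisible; the point is only the shape of the two queries.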

Comparative analysis

| Aspect | OLTP | OLAP |
|---|---|---|
| Primary use | Online transaction processing | Online analytical processing |
| Transaction type | Short and frequent | Long and complex |
| Data volatility | High | Low |
| Database design | Normalized for efficient updates and data integrity | Denormalized for speed |
| Query complexity | Simple queries | Complex queries |
| Main objective | Data integrity and speed | Insight generation and decision support |
| Example applications | Retail sales, banking, order management | Data mining, market research, business reporting |

The distinction between OLTP and OLAP is important for IT professionals and business analysts who want to optimize their systems for either transaction processing or data analysis. While OLTP systems are used for operations that require constant data updates or very fast retrieval, OLAP systems are used for strategic planning and analytical assessments.

Two types of transaction processing systems

Real-time processing captures each transaction as it occurs and updates databases within milliseconds. Here’s a real-world example: When a customer uses a credit card at a retail store, the system checks for available funds and approves the transaction if it does not appear fraudulent. The system then updates the available funds on the credit card and inserts the transaction into a transactions table. This process keeps databases accurate and current in operations where timing is critical. Real-time processing is important in environments such as online banking or stock trading, where delays could result in financial discrepancies or missed opportunities.

In contrast, batch processing involves collecting data over a period and processing it all at once. Instead of immediate updates, transactions are accumulated and processed at scheduled intervals, such as nightly or weekly. Utility companies often use this method for billing, gathering usage data over a month and then generating all invoices in a single run. Batch processing efficiently handles large amounts of data without requiring immediate updates, making it cost-effective and computationally less demanding.

Each method serves a different business need. Real-time processing is important for applications requiring up-to-the-moment accuracy and responsiveness, while batch processing suits scenarios where periodic updates are enough and uses fewer resources. Typically, any application that interacts with customers involves real-time processing; back-office systems may use either real-time or batch processing. Organizations often use a combination of both, taking advantage of the strengths of each depending on the application.
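The two styles can be contrasted in a few lines of Python. This is a minimal sketch, not a production design: the function names, the card and customer identifiers, and the rate are all hypothetical, and amounts are kept as integers (cents, kWh) to avoid rounding questions.

```python
from collections import defaultdict

def authorize_purchase(available_funds, card, amount):
    """Real-time path: validate and apply one transaction immediately."""
    if available_funds.get(card, 0) < amount:
        return False  # declined, nothing is changed
    available_funds[card] -= amount
    return True

def run_billing_batch(meter_readings, rate_cents_per_kwh):
    """Batch path: process a whole period's readings in one scheduled run."""
    usage = defaultdict(int)
    for customer, kwh in meter_readings:
        usage[customer] += kwh
    # One invoice per customer, produced only when the batch runs.
    return {customer: total * rate_cents_per_kwh for customer, total in usage.items()}

# Real time: each purchase is decided and applied as it arrives.
funds = {"card-1": 100}
approved = authorize_purchase(funds, "card-1", 40)
declined = authorize_purchase(funds, "card-1", 80)

# Batch: readings accumulate, then are billed together.
readings = [("c1", 10), ("c2", 5), ("c1", 3)]
invoices = run_billing_batch(readings, 20)
```

The real-time function touches one record per call and must answer immediately; the batch function sees the whole period at once, which is what lets it aggregate cheaply.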

Components and functions of transaction processing systems

Understanding the components and functions of a transaction processing system is important for making sure the system works well.

Inputs

Inputs in a transaction processing system are primarily transaction records and data that enter the system. These can include sales orders, payments, inventory adjustments, or any other pertinent business information. The input phase collects raw data from various sources, often through an automated method, such as payment terminals, or by manual data entry. This data must be accurate and complete so later processes are correct.

Processing

The processing stage transforms input data into meaningful information through techniques such as calculation, classification, sorting, and summarization. The system applies business rules and logic so each transaction follows defined protocols and procedures. Processing efficiency governs the overall performance of a transaction processing system, as it determines how quickly and accurately data is processed and made available for decision-making.

Outputs

Outputs are the results of the processing phase. They include reports, invoices, receipts, and acknowledgments, and they provide the information and actionable insights that keep businesses running. Outputs must be timely, accurate, and accessible so they meet user needs. They also feed customized reports that support strategic planning and operational adjustments.

Database

The database stores a comprehensive and organized repository of transaction data. It keeps data accurate, secure, and available for operations that need consistent access to historical and current transaction information. Effective database management is important for quick retrieval and updates, so businesses can maintain accurate records and comply with regulatory requirements.

ACID criteria

ACID compliance is a mission-critical database requirement that ensures the data integrity of operations even in high-concurrency environments. Relational databases, for one, have long relied on ACID compliance principles to guarantee consistency. Meanwhile, many modern distributed NoSQL systems prioritize availability and scalability, which can come at the expense of strict transactional guarantees. It is important to note that strong consistency and ACID compliance are not one and the same; strong consistency guarantees that the same data is seen at the same time by any and all users. ACID transactions are stricter, as they enforce atomicity, consistency, isolation, and durability. For large-scale applications, understanding these distinctions is crucial to maintaining both strong consistency and high performance.

Here are the four pillars of ACID compliance:

Atomicity means each transaction is processed as a single unit, so either all operations within the transaction are completed or none are. This is important for keeping transactional data reliable. If a failure occurs during the transaction, the system rolls back any actions, leaving no partial results. For instance, in a banking transaction, if money is to be transferred from one account to another, both the debit and credit operations must be completed. If one fails, both revert to the original state so the database remains consistent and reliable. This prevents data corruption.
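The bank-transfer case can be sketched with Python's built-in `sqlite3` module. The `accounts` table, the account names, and the `transfer` helper are hypothetical, introduced only for illustration. Used as a context manager, the connection commits if the block succeeds and rolls back if an exception escapes it, so a failed transfer leaves no partial debit behind.

```python
import sqlite3

def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst as one unit; undo everything on failure."""
    try:
        with conn:  # commit on success, roll back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src)
            )
            row = conn.execute(
                "SELECT balance FROM accounts WHERE id = ?", (src,)
            ).fetchone()
            if row is None or row[0] < 0:
                raise ValueError("insufficient funds or unknown source account")
            cur = conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst)
            )
            if cur.rowcount != 1:
                raise ValueError("unknown destination account")
        return True
    except ValueError:
        return False  # the debit above has already been rolled back

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id TEXT PRIMARY KEY, balance INTEGER NOT NULL)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()

ok = transfer(conn, "alice", "bob", 30)       # both updates apply
failed = transfer(conn, "alice", "bob", 500)  # debit is undone, credit never runs
balances = dict(conn.execute("SELECT id, balance FROM accounts"))
```

After the failed attempt, the balances are exactly what the first successful transfer left behind.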

Consistency involves maintaining the integrity of the database by ensuring that any transaction brings the database from one valid state to another. This means data follows all predefined rules, such as constraints, cascades, and triggers. When a data transaction occurs, it must uphold the integrity conditions stipulated for the database. For example, if a system requires that the total amount of funds in all accounts must remain constant after a series of transactions, any operation that violates this rule would be deemed inconsistent and, therefore, rejected. This principle makes sure that all data remains accurate and dependable across transactions.
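A declared constraint is one simple way a database enforces such rules. The sketch below, again using `sqlite3` with a hypothetical `accounts` table, adds a `CHECK` constraint that balances may never go negative. An update that would violate the rule raises `sqlite3.IntegrityError`, and the database stays in its previous valid state.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "id TEXT PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"  # the integrity rule
)
conn.execute("INSERT INTO accounts VALUES ('alice', 100)")
conn.commit()

try:
    with conn:
        # Would leave the balance at -150, which the CHECK constraint forbids.
        conn.execute("UPDATE accounts SET balance = balance - 250 WHERE id = 'alice'")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

balance = conn.execute(
    "SELECT balance FROM accounts WHERE id = 'alice'"
).fetchone()[0]
```

Rules that span several rows, such as a constant total across accounts, usually need triggers or application-level checks rather than a single column constraint.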

Isolation means that transactions running at the same time are processed independently, without interfering with each other. This way, the operations of one transaction do not affect those of another, even when they are executed concurrently. For example, if two users attempt to modify the same data simultaneously, the system ensures that one transaction is completed before another begins, preserving data integrity and preventing the "lost update" problem. Isolation is an important part of keeping transactional processes stable and reliable.
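Databases prevent lost updates in different ways, including locking and version checks. The sketch below illustrates one of them, an optimistic version check (compare-and-set), using a hypothetical in-memory `VersionedRecord` class. It is an illustration of the idea rather than how any particular database implements isolation. Two writers read the same snapshot; the second write is refused because the record changed underneath it, so that writer must re-read and retry.

```python
class VersionedRecord:
    """A value guarded by a version counter (hypothetical, for illustration)."""

    def __init__(self, value):
        self.value = value
        self.version = 0

    def read(self):
        return self.value, self.version

    def write(self, new_value, expected_version):
        """Apply the write only if nobody else has written since our read."""
        if self.version != expected_version:
            return False  # stale read: refusing avoids a lost update
        self.value = new_value
        self.version += 1
        return True

stock = VersionedRecord(10)

# Two transactions read the same snapshot of the stock level.
a_value, a_version = stock.read()
b_value, b_version = stock.read()

a_ok = stock.write(a_value - 3, a_version)  # first writer wins
b_ok = stock.write(b_value - 4, b_version)  # refused: version has moved on

# The second transaction re-reads the current state and retries.
b_value, b_version = stock.read()
b_retry_ok = stock.write(b_value - 4, b_version)
```

Without the version check, the second write would have stored 6 and silently discarded the first transaction's change.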

Durability means that once a transaction has been committed, it remains so, even in the event of a system failure or crash. That way, the effects of a committed transaction are permanently recorded in the database. Systems do this through mechanisms such as write-ahead logging, which records changes in a separate log before applying them to the database, and shadow paging, which writes changes to “shadow” copies of the affected pages and switches to them only when the transaction commits. For instance, once a transaction to withdraw money from an ATM is confirmed, the system must ensure that this change is never lost, even if a power failure occurs immediately afterward. Durability is essential for maintaining user confidence and ensuring that the system's data is always up-to-date and accurate.
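The idea behind write-ahead logging can be sketched in a few lines. The `TinyStore` class and its JSON-lines log format are hypothetical and deliberately simplified: each change is appended to a log and forced to disk before the in-memory state is updated, and a restart rebuilds the state by replaying the log. Real systems also handle torn writes, checkpoints, and log truncation, none of which is shown here.

```python
import json
import os
import tempfile

class TinyStore:
    """Key-value store that logs every change before applying it (sketch)."""

    def __init__(self, log_path):
        self.log_path = log_path
        self.data = {}
        self._replay()

    def _replay(self):
        """Rebuild in-memory state from the log, as after a crash."""
        if not os.path.exists(self.log_path):
            return
        with open(self.log_path, "r", encoding="utf-8") as log:
            for line in log:
                entry = json.loads(line)
                self.data[entry["key"]] = entry["value"]

    def put(self, key, value):
        # 1. Append the change to the log and force it to stable storage.
        with open(self.log_path, "a", encoding="utf-8") as log:
            log.write(json.dumps({"key": key, "value": value}) + "\n")
            log.flush()
            os.fsync(log.fileno())
        # 2. Only then apply it to the live state.
        self.data[key] = value

log_path = os.path.join(tempfile.mkdtemp(), "wal.log")
store = TinyStore(log_path)
store.put("atm:alice", 60)

# Simulate a restart: a fresh instance recovers the committed change.
recovered = TinyStore(log_path)
```

Because the log entry reaches disk before `put` returns, a crash after that point cannot lose the change.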

Typically, ACID is used in the context of SQL databases. Some NoSQL databases were designed to relax strict transactional guarantees in favor of scalability and availability. For example, several NoSQL databases follow BASE (basically available, soft state, eventual consistency) principles, which reflect the trade-offs the CAP theorem describes among consistency, availability, and partition tolerance in distributed systems. That means that instead of enforcing immediate consistency, this model promises data availability by spreading and replicating data across the nodes of a database cluster. BASE allows consistency to be tunable, which lets developers choose their own trade-off between availability and data accuracy. Many distributed databases provide configurable consistency levels (e.g., ONE, QUORUM, or ALL), enabling applications to balance performance and correctness based on requirements.
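A common rule of thumb for such levels is that a read is guaranteed to touch at least one replica holding the latest acknowledged write when the read and write acknowledgment counts together exceed the replication factor (R + W > N). The sketch below applies that rule to the ONE, QUORUM, and ALL levels named above. The function names are hypothetical, and the guarantees a real database offers at each level depend on that database.

```python
def required_acks(level, replication_factor):
    """Number of replicas that must acknowledge an operation at a given level."""
    if level == "ONE":
        return 1
    if level == "QUORUM":
        return replication_factor // 2 + 1  # strict majority
    if level == "ALL":
        return replication_factor
    raise ValueError(f"unknown consistency level: {level}")

def read_overlaps_write(write_level, read_level, replication_factor):
    """True when every read set must intersect every write set (R + W > N)."""
    w = required_acks(write_level, replication_factor)
    r = required_acks(read_level, replication_factor)
    return r + w > replication_factor

# With three replicas:
quorum_both = read_overlaps_write("QUORUM", "QUORUM", 3)  # 2 + 2 > 3
one_both = read_overlaps_write("ONE", "ONE", 3)           # 1 + 1 > 3 is false
write_one_read_all = read_overlaps_write("ONE", "ALL", 3) # 1 + 3 > 3
```

Lower levels answer faster and tolerate more failed nodes, at the price of possibly returning stale data.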

Transaction processing in distributed databases

Distributed databases handle transactions across multiple networked nodes. One transaction may span several nodes, so all involved systems need to coordinate to maintain the ACID principles. The system must ensure that either all operations in a transaction are completed or none are, preserving data integrity.
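One well-known way to coordinate an all-or-nothing outcome across nodes is two-phase commit. The text above does not name a specific protocol, so the sketch below is offered only as an illustration of the coordination idea, with a hypothetical `Participant` class standing in for a database node. It omits timeouts, logging, and coordinator failure, which are the hard parts in practice.

```python
class Participant:
    """Stand-in for one node taking part in a distributed transaction."""

    def __init__(self, name, can_commit=True):
        self.name = name
        self.can_commit = can_commit
        self.state = "idle"

    def prepare(self):
        """Phase 1: vote on whether this node can commit its part."""
        self.state = "prepared" if self.can_commit else "aborted"
        return self.can_commit

    def commit(self):
        self.state = "committed"

    def abort(self):
        self.state = "aborted"

def two_phase_commit(participants):
    """Commit everywhere only if every participant votes yes."""
    # Phase 1: collect a vote from every participant.
    votes = [p.prepare() for p in participants]
    # Phase 2: apply the unanimous decision to all of them.
    if all(votes):
        for p in participants:
            p.commit()
        return True
    for p in participants:
        p.abort()
    return False

healthy = [Participant("node-a"), Participant("node-b")]
committed = two_phase_commit(healthy)

mixed = [Participant("node-a"), Participant("node-b", can_commit=False)]
aborted = not two_phase_commit(mixed)
```

A single "no" vote aborts the transaction on every node, including those that were ready to commit.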

Keeping data consistent across multiple systems is crucial in distributed transactions. In Aerospike’s strong consistency mode, acknowledged writes are not lost, but reads are configurable, allowing applications to define the acceptable level of data staleness based on their needs. This requires synchronization to keep distributed nodes aligned so that any transaction maintains the ACID properties across all nodes. This can be challenging due to network latency and potential failures, but it is vital for applications demanding real-time data accuracy.

Scaling distributed systems involves techniques such as shared-nothing architecture and database clusters. In a shared-nothing setup, each node is independent, which reduces contention and lets the systems work in parallel. This approach supports scalability by allowing the system to expand horizontally, adding more nodes to handle increased loads. A database cluster also distributes data across several nodes, which can work independently or in coordination, making the system more available and fault-tolerant.

Distributed transaction systems must navigate complexities such as network partitions and node failures, which can disrupt operations. Strategies such as using redundancy, or having multiple copies of the data, and consensus algorithms, where the majority of the replicated nodes agree on the data, help keep the system reliable. Although these systems offer robust solutions for managing large-scale transactions, they require careful design and implementation to balance performance with data integrity.
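The majority-agreement idea can be reduced to a toy example: given the value each replica currently reports, accept a value only if more than half of the replicas agree on it. The `majority_value` helper is hypothetical, and this is not a consensus protocol; real algorithms also handle ordering, leader changes, and replicas that fail mid-operation.

```python
from collections import Counter

def majority_value(replica_values):
    """Return the value a strict majority of replicas agree on, else None."""
    if not replica_values:
        return None
    value, count = Counter(replica_values).most_common(1)[0]
    return value if count > len(replica_values) // 2 else None

# Two of three replicas have the newer value, so it wins.
agreed = majority_value(["v2", "v2", "v1"])

# No value is held by a majority, so nothing can be accepted.
split = majority_value(["v1", "v2", "v3"])
```

With an odd number of replicas, a majority can still be reached when a minority of nodes is unreachable, which is why redundancy and majority agreement are often used together.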

Aerospike’s approach to distributed transactions

Many distributed databases operate on a quorum-based consensus algorithm like Raft, where a “leader” updates and replicates the data. While effective, a Raft consensus approach can introduce latency and availability trade-offs. Aerospike leverages a non-quorum consensus model that ensures strong consistency without the quorum system overhead. This allows Aerospike to deliver ACID-compliant multi-record transactions with low latency, even at scale. By combining high availability with transactional integrity, Aerospike provides enterprises with a modern distributed database that meets both performance and consistency demands.