Aerospike Database 7.1: Caching and storage pick up speed

Explore how precision LRU cache eviction and optimized device persistence elevate performance in this release.

Today marks another important product milestone: the release of Aerospike Database 7.1. It builds on the innovations of Aerospike Database 7.0, which unified our storage format for in-memory, SSD, and persistent memory (PMem) storage engines. This release enables enterprises to consolidate expensive legacy cache and database platforms. Database 7.1 introduces precision least recently used (LRU) cache eviction within our database core, expanding our ability to drive enterprise-grade in-memory caching use cases.

This latest version of our database builds on 7.0’s storage flexibility by adding support for low-cost, persistent cloud block storage like AWS Elastic Block Store (EBS), popular on-prem enterprise network-attached storage (NAS) devices, and networked NVMe-compatible block storage. In addition to this significant storage optimization for networked NVMe block storage, Database 7.1 includes a set of capabilities that make Aerospike Database clusters easier for our enterprise customers to deploy and manage.

LRU cache eviction behavior

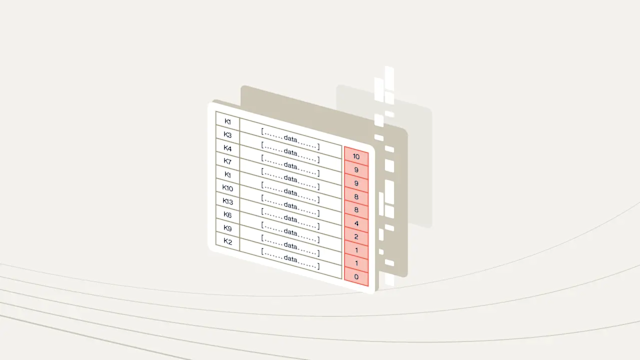

Until version 7.1, Aerospike Database had one time-to-live (TTL) based eviction behavior, which decided the order by which records get removed once a namespace eviction threshold is breached (similar to the volatile-ttl eviction policy in Redis). When evictions kick in, the records closest to their expiration are deleted first.
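As a rough illustration of the ordering only (not Aerospike's actual eviction algorithm), the TTL-based behavior can be modeled as sorting records by void-time and removing the soonest-to-expire first. The `Record` structure and the `evict_by_ttl` helper are hypothetical names introduced for this sketch.

```python
from typing import NamedTuple


class Record(NamedTuple):
    key: str
    void_time: int  # absolute expiration time in seconds (hypothetical model)


def evict_by_ttl(records: list[Record], count: int) -> list[str]:
    """Return the keys of the `count` records closest to expiration."""
    by_expiry = sorted(records, key=lambda r: r.void_time)
    return [r.key for r in by_expiry[:count]]


records = [Record("a", 500), Record("b", 120), Record("c", 300)]
print(evict_by_ttl(records, 2))  # ['b', 'c']
```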

This release adds a new LRU eviction behavior (similar to the volatile-lru eviction policy in Redis), which is controlled by the default-read-touch-ttl-pct configuration at the namespace and set levels, or optionally assigned by client read operations. With this mechanism, the first read that occurs within a defined percentage of the record’s TTL from its expiration will also touch the record (in the master and replica partitions), extending its expiration by the previous TTL interval (calculated as the delta between the record’s void-time and last-update-time).
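The read-touch decision described above can be sketched as a small function. This is a simplified model under stated assumptions, not the server implementation: the function name `read_touch` is hypothetical, times are plain integers in seconds, and the touch is assumed to restart the previous TTL interval from the time of the read.

```python
from typing import Optional


def read_touch(void_time: int, last_update_time: int, now: int, pct: int) -> Optional[int]:
    """Return the new void-time if this read should touch the record, else None."""
    ttl = void_time - last_update_time  # previous TTL interval
    remaining = void_time - now         # time left before expiration
    if remaining <= 0 or pct <= 0:
        return None                     # already expired, or read-touch not enabled
    if remaining * 100 <= ttl * pct:    # read falls within pct% of the TTL from expiry
        return now + ttl                # assumption: TTL restarts from the read time
    return None


# Record written at t=0 with a 10-hour TTL, threshold of 80 percent.
print(read_touch(36000, 0, 3600, 80))   # None (9 hours left, outside the 8-hour window)
print(read_touch(36000, 0, 10800, 80))  # 46800 (7 hours left, so the read touches)
```

Because the touch also moves the last-update-time forward, a second read immediately afterwards sees a full TTL remaining and does not touch again, which matches the "first read" wording above.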

This approach provides an efficient persistence of record read activity, resulting in higher precision LRU evictions than a sampling-based implementation used by other in-memory caches. As a result, a namespace configured to allow record TTLs will efficiently retain the freshest records without requiring any application-side workarounds.

Aerospike Database namespaces can be configured to serve a range of deployments, from a database with linearizable strong consistency (SC) to an in-memory cache with fantastic performance, durability, inline compression, and fast restart capabilities. By default, namespaces are not configured to allow eviction of data, with stop-writes settings acting to prevent applications from overfilling the namespace (similar to the noeviction policy in Redis). In many cases, namespaces are configured as Available and Partition-tolerant (AP), and records are assigned a TTL, either through a default-ttl period configured for the namespace (or at the set level) or assigned by client policy from the application.

For a deeper dive, read our blog, Announcing new features to boost LRU cache performance.

Optimized device persistence

Aerospike 7.1 adds optimizations for lower-cost, networked NVMe block storage, including cloud-based block storage and traditional network-attached storage (NAS). Aerospike persists records to data storage in constant-sized write-blocks, which maximizes throughput for high TPS use cases. Before Database 7.1, a write-block was flushed to disk either when it was full or when triggered by the flush-max-ms timer. This approach had a downside in lower-throughput scenarios dominated by timer flushes, where partially full write-blocks were written to disk.

Database 7.1 optimizes persistence to storage devices by writing incoming records in flush-size units, appended to each other until the write-block is filled. This involves several configuration changes. There is no longer a write-block-size configuration parameter, and all write-blocks now have an 8MiB size. The conservative approach when upgrading to Database 7.1 is to set flush-size to the write-block-size value from the namespace configuration used in the previous version. The max-record-size configuration should be used explicitly to limit record size, as you can no longer rely on write-block-size for this purpose. The max-record-size parameter now has a default value of 1MiB.

In terms of performance, we have seen equivalent max throughput in Database 7.0 and 7.1 when comparing a 128KiB flush size against a 128KiB write-block size. We see improved max throughput in Database 7.1 when comparing a 128KiB flush size with write-blocks sized 1MiB or larger in Database 7.0. There is also an ease of use improvement - the flush-size is a dynamic configuration, which can be easily increased or reduced, as opposed to write-block-size, which is a static configuration that can only be raised; otherwise, the storage device must be erased.

In a related change, the post-write-queue configuration parameter, expressed as a number of write-blocks (of a variable write-block-size), has been replaced in Database 7.1 by the post-write-cache, expressed in bytes per device. This new dynamic configuration is very similar to the max-write-cache.
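For sizing the new parameter, the arithmetic is simply blocks multiplied by block size. The sketch below assumes you want to keep roughly the same amount of cached data per device after upgrading; the helper name and the sample values (256 blocks of 1MiB) are illustrative, not recommendations.

```python
MIB = 1024 * 1024


def post_write_cache_bytes(post_write_queue_blocks: int, write_block_size: int) -> int:
    """Bytes per device equivalent to an old post-write-queue setting."""
    return post_write_queue_blocks * write_block_size


# Illustrative values: 256 write-blocks of 1MiB each.
print(post_write_cache_bytes(256, 1 * MIB) // MIB)  # 256
```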

Database 7.1’s new storage persistence mechanism avoids excessive padding when a partially filled write-block is persisted to disk, and reduces wasted disk space. When combined with NVMe-compatible network-attached storage (NAS) such as EBS, there is considerable efficiency gained by sending only the recently written data with no padding: fewer bytes on the wire, fewer bytes in storage.
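To get a feel for the savings, the following back-of-the-envelope model compares the bytes sent to the device by a single timer-triggered flush. It assumes that the older behavior writes the whole write-block, padding included, and that the newer behavior writes only whole flush-size units containing new data; both function names and the sample sizes are hypothetical.

```python
KIB = 1024
MIB = 1024 * KIB


def bytes_written_full_block(write_block_size: int) -> int:
    """Pre-7.1 model: a timer flush writes the entire write-block, padding included."""
    return write_block_size


def bytes_written_flush_units(new_bytes: int, flush_size: int) -> int:
    """7.1 model (assumption): only the flush-size units holding new data are written."""
    units = -(-new_bytes // flush_size)  # ceiling division
    return units * flush_size


old = bytes_written_full_block(1 * MIB)
new = bytes_written_flush_units(100 * KIB, 128 * KIB)
print(old // KIB, new // KIB)  # 1024 128
```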

Another change to device persistence is that flushes to disk (by separate threads) are now done in a non-blocking way. If the storage device has a transient period of being slow to complete its writes, the service threads will continue writing records into streaming write buffers (SWBs) and delay flushing until the drives catch up. These changes reduce backpressure from EBS (and similar persistent devices), making for a better cloud-native solution.

Other operational improvements

Database 7.1 includes numerous operational improvements for enterprise customers.

Reduced CPU consumption

Encryption at rest is now performed just before a write is flushed to the storage device, rather than as each record is written into the SWB, which reduces the CPU used by storage encryption.

Capacity planning

Capacity planning is made easier by adding indexes-memory-budget, which helps control the amount of memory a namespace can consume for its primary, secondary, and set indexes. This configuration parameter acts as a stop-writes threshold, stopping client-writes with error code 8 (out of space). The evict-indexes-memory-pct is the new eviction threshold associated with the indexes memory budget.
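The relationship between the budget and the two thresholds can be modeled as below. This is a simplified sketch: the function name and state labels are hypothetical, the exact comparisons the server uses (for example, "greater than" versus "greater than or equal") are assumptions, and a budget of zero is treated as "no budget configured".

```python
def index_memory_state(used_bytes: int, budget_bytes: int, evict_pct: int) -> str:
    """Classify a namespace's index memory use against its budget (simplified model)."""
    if budget_bytes == 0:
        return "no-budget"            # budget not configured, nothing to enforce
    if used_bytes >= budget_bytes:
        return "stop-writes"          # client writes fail with error code 8
    used_pct = used_bytes * 100 // budget_bytes
    if evict_pct > 0 and used_pct >= evict_pct:
        return "evict"                # evict-indexes-memory-pct threshold breached
    return "ok"


print(index_memory_state(500, 1000, 80))   # ok
print(index_memory_state(900, 1000, 80))   # evict
print(index_memory_state(1000, 1000, 80))  # stop-writes
```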

Auto-reviving dead partitions for smoother operation of SC clusters

In strong consistency (SC) deployments, the Aerospike Database will respond to a failure of more nodes than the namespace replication factor by marking the partitions on those nodes as "dead" and requiring the operator to investigate and manually revive them.

In Database 7.1, a new (optional) auto-revive configuration parameter removes the need for manual intervention for a few scenarios in which data loss is less likely. An SC namespace using this configuration will automatically revive its dead partitions when it restarts, and client traffic to the nodes is allowed.

Faster incident handling with better logging and metrics

In every server release, we attempt to reduce the time needed to resolve support incidents by enhancing our logs and metrics.

Partial flush metric

To know if device persistence is dominated by partial flushes, Database 7.1 adds a pair of new storage-engine metrics. partial_writes tracks the number of write-blocks that were filled through incremental partial flushing, and defrag_partial_writes tracks the number of write-blocks partially flushed by the defragmentation process. The pair appears in storage-engine.device[ix], storage-engine.file[ix] and storage-engine.slice[ix] groups of metrics returned by the namespace info command. Compare these to the writes and defrag_writes metrics tracking the total number of write-blocks written.

These metrics are also dumped regularly to the log as drv_ssd log lines.

In workloads dominated by partial writes, it is best to configure flush-size to be relatively small, 128KiB, or even less.
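One way to judge whether a device is dominated by partial flushes is to compare partial_writes to writes. The sketch below works on a plain dictionary of already-parsed per-device statistics; the sample numbers are made up, and the helper name is hypothetical.

```python
def partial_write_ratio(stats: dict[str, int]) -> float:
    """Fraction of written write-blocks that were filled through partial flushes."""
    writes = stats.get("writes", 0)
    if writes == 0:
        return 0.0
    return stats.get("partial_writes", 0) / writes


# Made-up sample values for a single device.
device_stats = {
    "writes": 2000,
    "partial_writes": 1500,
    "defrag_writes": 400,
    "defrag_partial_writes": 40,
}
ratio = partial_write_ratio(device_stats)
print(f"{ratio:.0%}")  # 75%
```

A high ratio like the one in this example would suggest trying a smaller flush-size, in line with the guidance above.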

Tracking read-related device IO errors

Relevant only for storage-engine.device, the new read_errors metric counts the number of device IO errors encountered while performing read operations. It was added to the per-device statistics (alongside defrag_reads and similar). This metric is also dumped regularly to the log as drv_ssd log lines.

Records per batch request

The new batch-rec-count histogram dump appears in the log each ticker interval, following the batch-index latency histogram. It records the number of records per batch request sent to the cluster node, in logarithmic buckets. This histogram is global and not provided per namespace.
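To illustrate what logarithmic bucketing means for batch sizes, here is a minimal sketch that assumes power-of-two bucket boundaries, where bucket i holds counts from 2^i up to (but not including) 2^(i+1). The source only states that the buckets are logarithmic, so the base and the function names are assumptions.

```python
from collections import Counter


def bucket_index(rec_count: int) -> int:
    """Bucket i holds record counts in the range [2**i, 2**(i+1))."""
    return rec_count.bit_length() - 1


def batch_rec_histogram(batch_sizes: list[int]) -> dict[int, int]:
    """Count batch requests per logarithmic bucket, ignoring empty batches."""
    counts = Counter(bucket_index(n) for n in batch_sizes if n > 0)
    return dict(sorted(counts.items()))


print(batch_rec_histogram([1, 3, 5, 100, 120, 1000]))
# {0: 1, 1: 1, 2: 1, 6: 2, 9: 1}
```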

Partitions created by data migration

The new migrate_fresh_partitions namespace statistic (for both AP and SC namespaces) also appears in the partition balance log lines as “fresh-partitions.”

Metrics and logging for new features

The following metrics and log lines are associated with the new Database 7.1 features.

Namespace index memory monitoring

If you configure a namespace with a non-zero indexes-memory-budget, the new indexes_memory_used_pct metric shows how close it is to maxing out its budget. It can be returned by the namespace info command.

LRU statistics

The following metrics track how frequently a read operation triggers a touch of the record in the master and replica partitions: read_touch_tsvc_error, read_touch_tsvc_timeout, read_touch_success, read_touch_error, read_touch_timeout, and read_touch_skip.

The read_touch_skip metric indicates how many touches were abandoned upon finding that the touch had already taken place or was in progress, removing the need to proceed.

Security enhancements

When a new node joins an Aerospike Database cluster, its role-based access control (RBAC) information is transmitted to it as system metadata (SMD) from other cluster nodes. Until the SMD transmission is completed, this security-enabled node will only have one admin user. In Database 7.1, an alternative password (other than “admin”) can be configured in default-password-file to be fetched from a file, an environment variable, or via the Secret Agent.

Relocatable RPM packages

Starting with Database 7.1, the Red Hat package manager (RPM) can install the server files to locations other than /usr, /etc and /opt by using the --relocate flag.

rpm --relocate /opt=/aero/opt --relocate /etc=/aero/etc --relocate /usr=/aero/usr -Uvh <aerospike-package.rpm>

After RPM relocation, you will need to run the Aerospike daemon (asd) directly rather than through systemctl.

Before starting up asd, you must manually execute (or automate) the same tasks described in Aerospike Daemon Management. This includes setting kernel parameters and increasing the file descriptor limit. Our systemd init script normally executes these tasks.

Inside the configuration file, you would need to adjust work directory and user-path to the alternate directory paths. Then, you can start asd from the command line with the --config-file option pointing to the configuration file.

Developer API changes

LRU read-touch policy

As described above, applications can override the namespace/set-level LRU eviction configuration by sending an explicit Policy.readTouchTtlPercent along with read and batch-read operations.

A value of -1 explicitly instructs the server to avoid touching the record, a value of 0 instructs it to use the server-side default-read-touch-ttl-pct configuration, and values between 1-100 specify a percent-of-expiration threshold for the read-touch behavior.
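The three value ranges can be summarized in a small resolver. This is a Python model of the semantics described above, not client library code; the function name is hypothetical, and `None` is used here to mean "do not touch".

```python
from typing import Optional


def effective_read_touch_pct(client_pct: int, server_default_pct: int) -> Optional[int]:
    """Resolve the read-touch threshold for one read, or None when it must not touch."""
    if client_pct == -1:
        return None                # client explicitly disables read-touch
    if client_pct == 0:
        return server_default_pct  # defer to default-read-touch-ttl-pct
    if 1 <= client_pct <= 100:
        return client_pct          # client-supplied percent-of-expiration threshold
    raise ValueError(f"invalid read-touch percent: {client_pct}")


print(effective_read_touch_pct(-1, 80))  # None
print(effective_read_touch_pct(0, 80))   # 80
print(effective_read_touch_pct(50, 80))  # 50
```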

Relaxed AP queries

Being able to mark queries as ‘short’ was an optimization introduced in Aerospike Database 6. When a cluster size is changing, short queries relax the condition that a partition must be full before it can be queried. Database 7.1 introduces the ability to similarly relax a long query while the cluster size changes, through a new QueryPolicy.expectedDuration field. The values SHORT and LONG replace the true/false values of the deprecated QueryPolicy.shortQuery field. The new value LONG_RELAX_AP allows a developer to specify that a long query should relax consistency when querying an AP namespace in a cluster undergoing data migration (rebalancing). This is mainly useful for long queries that, in reality, return only a small number of records.
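The relationship between the deprecated boolean and the new three-valued field can be modeled as follows. This is an illustrative Python enum mirroring the value names mentioned above, not the client library's own type; `QueryDuration`, `from_short_query`, and `relaxes_partition_check` are hypothetical names.

```python
from enum import Enum


class QueryDuration(Enum):
    LONG = "LONG"
    SHORT = "SHORT"
    LONG_RELAX_AP = "LONG_RELAX_AP"


def from_short_query(short_query: bool) -> QueryDuration:
    """Map the deprecated true/false flag onto the new field's values."""
    return QueryDuration.SHORT if short_query else QueryDuration.LONG


def relaxes_partition_check(duration: QueryDuration) -> bool:
    """True when the query may run against partitions that are not yet full."""
    return duration in (QueryDuration.SHORT, QueryDuration.LONG_RELAX_AP)


print(from_short_query(True).name)                           # SHORT
print(relaxes_partition_check(QueryDuration.LONG))           # False
print(relaxes_partition_check(QueryDuration.LONG_RELAX_AP))  # True
```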

Strict enforcement of map key types

After progressively restricting map keys to scalar data types in newer client releases, Database 7.1 explicitly enforces that map keys are string, integer, or bytes. A client trying to write map data that contains map keys with disallowed data types will get rejected with an error.

You should use the latest version of Aerospike’s asvalidation tool to sample or scan entire sets or namespaces for invalid map keys. If such records are found, you must modify your applications to use supported map key data types before upgrading to Database 7.1.
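Independently of the asvalidation tool, an application can run a client-side pre-check on the data it is about to write. The sketch below walks nested Python dictionaries and lists and reports map keys that are not strings, integers, or bytes. It is a hypothetical helper, not part of any Aerospike tooling, and it treats booleans as disallowed because Python's `bool` is a subclass of `int`.

```python
ALLOWED_KEY_TYPES = (str, int, bytes)


def find_invalid_map_keys(value, path="$"):
    """Yield the paths of map keys whose type is not string, integer, or bytes."""
    if isinstance(value, dict):
        for key, item in value.items():
            key_path = f"{path}[{key!r}]"
            # bool is a subclass of int in Python, so reject it explicitly (assumption).
            if isinstance(key, bool) or not isinstance(key, ALLOWED_KEY_TYPES):
                yield key_path
            yield from find_invalid_map_keys(item, key_path)
    elif isinstance(value, (list, tuple)):
        for index, item in enumerate(value):
            yield from find_invalid_map_keys(item, f"{path}[{index}]")


bins = {"scores": {1: "a", 2.5: "b"}, "tags": [{"ok": 1}, {(1, 2): "x"}]}
print(list(find_invalid_map_keys(bins)))
# ["$['scores'][2.5]", "$['tags'][1][(1, 2)]"]
```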

Try Aerospike Database 7.1

Expanding on Aerospike Database 7’s persistence, fast restart, and inline compression capabilities with the new LRU eviction behavior makes Database 7.1 a fantastic in-memory cache solution. LRU cache evictions are available for any type of storage engine—in-memory, SSD, and PMem.

Optimized persistence to storage devices improves throughput, stability, and efficiency, especially when using NVMe-compatible block storage.

Consult the platform compatibility page and the minimum usable client versions table. For more details, read the Database 7.1 release notes and upgrade instructions. You can download Aerospike Enterprise Edition (EE) and run it in a single-node evaluation or get started with a 60-day multi-node trial.