Estimate AVS scaling needs

Overview

Aerospike Vector Search (AVS) scales horizontally to accommodate large indexes and high-throughput SLAs. This page provides guidance for sizing and scaling your cluster based on your use case.

Choosing a specialized node role

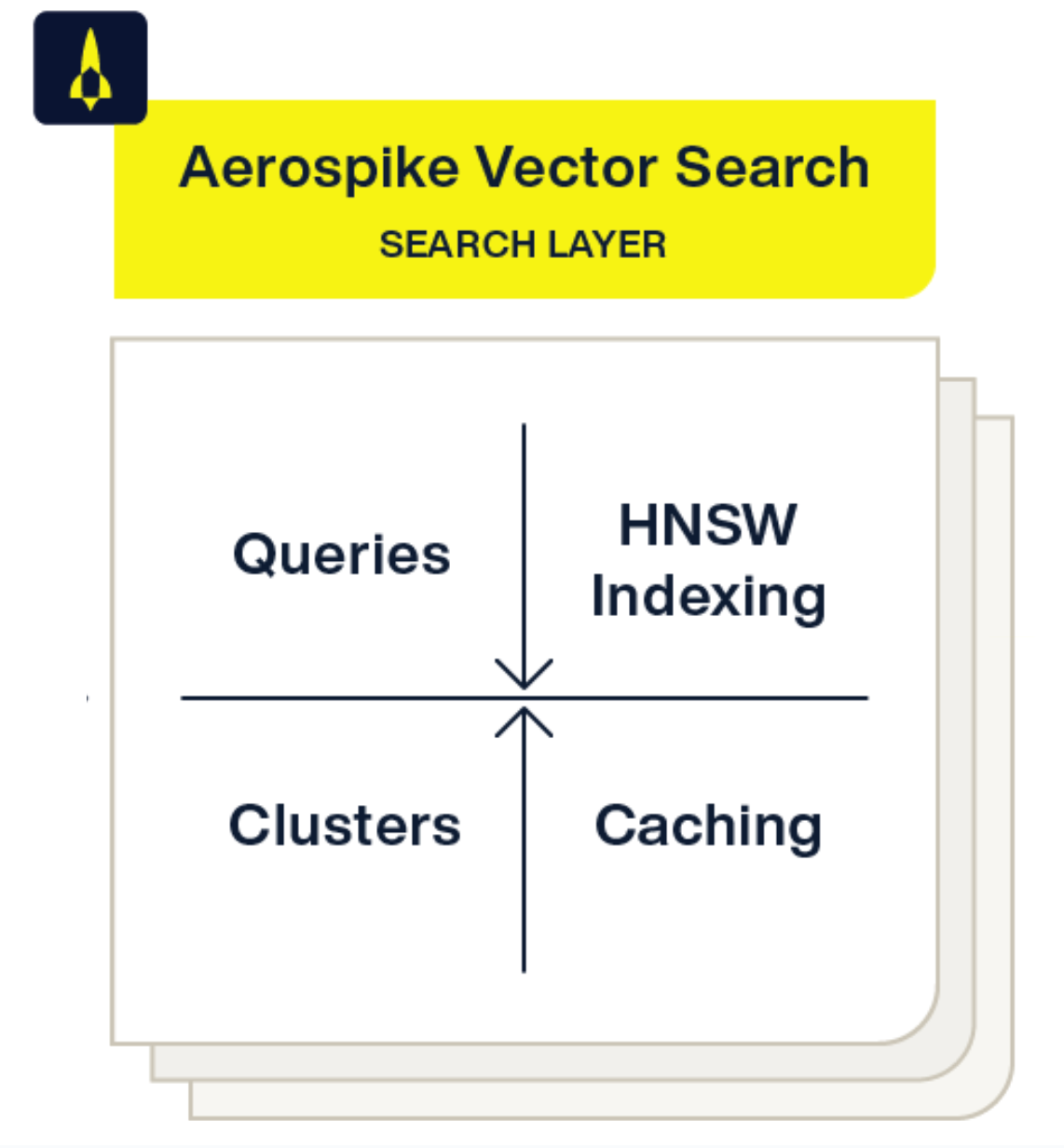

By default, AVS nodes perform indexing and querying simultaneously. To isolate and scale a node to meet indexing or query SLAs, you can configure that node to perform only one of the following roles:

- Query and Caching - Nodes available for query perform HNSW traversals and cache the index and results in memory.

- Indexing - Nodes available for indexing handle upsert calls and HNSW index healing.

If you follow our Kubernetes deployment guide, the default deployment has two query nodes and one indexing node.

See the configuration guide for specific configuration settings.

You can check the node roles of your cluster using the asvec CLI tool.

Scaling for query latency

A common scaling scenario is to reduce search times by ensuring your index is fully available in the AVS cache. You can accomplish this by allocating enough memory to hold the entire index on each node, or across nodes. Depending on how the index is distributed across the cache, you will see one of three performance modes, described under Cache distribution.

The following query latency figures are estimates that assume sufficient CPU headroom, no TLS configured, default index configurations, and a top-K of 100 on a 100-dimension vector space. Consider your recall goals in addition to latency when choosing an SLA.

| Caching Level | Cache Hit Ratio | p50 | p95 | p99 |
|---|---|---|---|---|
| Index cached on every AVS node | 100% | 2.5ms | 5ms | 7.5ms |
| Index cached across AVS nodes | >99% | 10ms | 25ms | 50ms |
| Index mostly cached | >90% | 10ms | 150ms | 200ms |
| Index partially cached | <50% | 100ms | 500ms | 1.25s |

While you can plan your cache distribution by calculating your index size, closely monitor the cache hit ratio when optimizing cache performance. In addition to scaling your cluster, you can configure cache size and expiration for individual indexes.
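To make the latency table easier to apply while monitoring, the following minimal Python sketch maps a measured cache hit ratio to the matching row of estimates. The function `estimate_for_hit_ratio` and the type `LatencyEstimate` are hypothetical helpers, not part of AVS or its client libraries, and the thresholds are copied from the table. The table does not cover hit ratios between 50% and 90%, so the sketch returns `None` for that range rather than interpolating.

```python
from typing import NamedTuple, Optional


class LatencyEstimate(NamedTuple):
    caching_level: str
    p50_ms: float
    p95_ms: float
    p99_ms: float


def estimate_for_hit_ratio(hit_ratio: float) -> Optional[LatencyEstimate]:
    """Return the table row matching a cache hit ratio in the range 0.0 to 1.0."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0.0 and 1.0")
    if hit_ratio == 1.0:
        return LatencyEstimate("Index cached on every AVS node", 2.5, 5.0, 7.5)
    if hit_ratio > 0.99:
        return LatencyEstimate("Index cached across AVS nodes", 10.0, 25.0, 50.0)
    if hit_ratio > 0.90:
        return LatencyEstimate("Index mostly cached", 10.0, 150.0, 200.0)
    if hit_ratio < 0.50:
        return LatencyEstimate("Index partially cached", 100.0, 500.0, 1250.0)
    return None  # hit ratios from 50% to 90% are not covered by the table


print(estimate_for_hit_ratio(0.995))
```

These figures remain estimates under the stated test conditions, so treat the result as a starting point for an SLA discussion, not a guarantee.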

Cache distribution

Each index has unique latency characteristics depending on its size and the memory capacity of each AVS node. You can adjust the cache expiration period to prevent cooling of the cache and improve performance.

- Index cached on all nodes - For smaller indexes, AVS builds the cache automatically on every node and performs queries in memory without steering to another node.

- Index cached across nodes - For larger indexes where performance is a concern, distributing your index across the nodes in your cluster ensures that AVS performs queries in memory. Steering queries to other nodes may be required, resulting in an increased likelihood of cache misses.

- Partially cached indexes - For larger indexes where spikes are not a concern, the default cache settings distribute and expire the cache across your AVS nodes. This configuration optimizes memory use in AVS for frequent queries, but it does not have a minimum memory requirement.

Example index sizings

The following examples show in-memory deployment patterns for various index sizes, assuming that 50% of each node's total RAM is allocated to index caching. AVS requires additional RAM for process and node management.

To calculate your index size, see our index sizing formula.

| Description | Caching Level | Records | Dimensions | Index Size | Node Size (RAM) | Cluster Size (Nodes) |
|---|---|---|---|---|---|---|
| 1M Low Dimension | On Node | 1,000,000 | 128 | 1 GB | 32 GB | N/A |
| 1B Medium Dimension | Across Nodes | 1,000,000,000 | 768 | 3.5 TB | 512 GB | 16 |
| 1B High Dimension | Across Nodes | 1,000,000,000 | 3,072 | 12.4 TB | 1,024 GB | 32 |
| Billions High Dimension | Partially Cached | 1,000,000,000+ | 768+ | 35 TB+ | N/A | N/A |
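The arithmetic behind these examples can be sketched in Python. This is a rough lower-bound calculation, not an official sizing tool: the function names are hypothetical, 1 TB is taken as 1,024 GB, and the index is assumed to spread evenly across nodes with no duplication. The 50% cache fraction comes from the text above.

```python
import math


def min_nodes_to_cache(index_size_gb: float, node_ram_gb: float,
                       cache_fraction: float = 0.5) -> int:
    """Smallest node count whose combined cache budget holds the whole index."""
    per_node_cache_gb = node_ram_gb * cache_fraction
    return math.ceil(index_size_gb / per_node_cache_gb)


def caching_level(index_size_gb: float, node_ram_gb: float, node_count: int,
                  cache_fraction: float = 0.5) -> str:
    """Classify which caching level a cluster can reach for one index."""
    per_node_cache_gb = node_ram_gb * cache_fraction
    if index_size_gb <= per_node_cache_gb:
        return "on every node"
    if index_size_gb <= per_node_cache_gb * node_count:
        return "across nodes"
    return "partially cached"


print(min_nodes_to_cache(3.5 * 1024, 512))    # 14
print(min_nodes_to_cache(12.4 * 1024, 1024))  # 25
print(caching_level(1, 32, 1))                # on every node
```

Under these assumptions the minimums are 14 and 25 nodes, while the table lists 16 and 32. Treat the computed value as a floor and the table as the recommended starting point.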

Scaling for query throughput

AVS is designed to handle high query throughput, but transactions are queued if the host machine does not have enough CPU resources. We recommend scaling up for periods of high query throughput.

Query throughput estimates

| Description | Peak QPS | Dimensions | QPS / Core | Cluster Size* |
|---|---|---|---|---|
| Low Dimension | 100,000 | 128 | 200 | 16 |
| Medium Dimension | 100,000 | 768 | 50 | 64 |

*Sizing estimates are based on 32-core node instances. Scaling vertically beyond 32 cores has diminishing returns, and scaling beyond 64 cores results in contention and degraded performance. See Known Issues for more information.
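The cluster sizes in the throughput table follow from dividing peak QPS by per-core throughput and then by cores per node. A minimal Python sketch of that calculation follows; `nodes_for_qps` is a hypothetical helper name, and the 32-core default reflects the node size stated above.

```python
import math


def nodes_for_qps(peak_qps: int, qps_per_core: int, cores_per_node: int = 32) -> int:
    """Estimate how many nodes are needed to serve peak_qps."""
    cores_needed = math.ceil(peak_qps / qps_per_core)
    return math.ceil(cores_needed / cores_per_node)


print(nodes_for_qps(100_000, 200))  # 16
print(nodes_for_qps(100_000, 50))   # 63
```

The low-dimension result matches the table. The medium-dimension result of 63 is one node below the table's 64, so use the calculation as a minimum rather than an exact target.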

Scaling for indexing throughput

For some use cases, how quickly you can re-index your data is a factor in how you scale your cluster.

Full index construction is done in batches to allow for real-time queries and is not optimized in memory. While you can scale out for CPU utilization, full index completion is slower than building asynchronously in memory.

Indexing throughput estimates

The following figures estimate how many records can be indexed in one hour.

| Description | Dimensions | Records Indexed per Hour | Node Size (CPU Cores) | Cluster Size (Nodes) |
|---|---|---|---|---|
| Low Dimension | 128 | 40M | 8 | 1 |
| Medium Dimension | 768 | 10M | 32 | 1 |
| High Dimension | 3,072 | 3M | 32 | 3 |
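To turn these hourly rates into a rough build-time estimate, divide the record count by the rate for the matching row. The Python sketch below does this; `hours_to_index` and `INDEXED_PER_HOUR` are hypothetical names, and each rate applies only to the node size and cluster size listed in its row. The sketch does not assume that throughput scales linearly with more nodes.

```python
# Hourly indexing estimates from the table, keyed by vector dimensions.
INDEXED_PER_HOUR = {
    128: 40_000_000,   # 8-core node, 1 node
    768: 10_000_000,   # 32-core node, 1 node
    3072: 3_000_000,   # 32-core nodes, 3 nodes
}


def hours_to_index(records: int, dimensions: int) -> float:
    """Estimate hours to index `records` vectors at the table's rate."""
    if dimensions not in INDEXED_PER_HOUR:
        raise KeyError(f"no estimate for {dimensions} dimensions")
    return records / INDEXED_PER_HOUR[dimensions]


print(hours_to_index(1_000_000_000, 768))  # 100.0
```

For example, one billion 768-dimension records at the listed rate would take about 100 hours on the single 32-core node in that row.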