Why your application is only as fast as your slowest transaction

Explore the role of caching in enhancing database efficiency and discover key use cases for implementing a caching layer in your stack.

“A chain is only as strong as its weakest link.” You’ve heard that expression, right? Well, for databases, the equivalent is “Your application is only as fast as your slowest transaction.” Most application operations, such as loading a page, are made up of several database sub-operations. So if even one of those sub-operations bogs down, it can bring the whole application to its knees.

Many teams integrate a caching layer into their stack to absorb these slowdowns and speed up real-time operations. While a cache is a very useful tool, it’s not a silver bullet for optimizing the speed and performance of your entire database.

So why would “sub-millisecond latency” be important if countless applications don’t require such a stringent threshold?

For instance, your application might have a 200-millisecond threshold, which, on paper, obviates the need for sub-millisecond performance. But it’s a slippery slope. With a 200 ms threshold, it’s easy to think, “A few milliseconds here or there don’t matter.” But those milliseconds are incurred on every operation, and they add up. And your users will notice, even in an application where response time doesn’t seem all that critical.
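To see how small per-call delays compound, here is a back-of-the-envelope sketch. It assumes, purely for illustration, a request that makes 25 database calls one after another; the call count and the per-call latencies are hypothetical, not measurements.

```python
def total_latency_ms(per_call_ms: float, calls: int) -> float:
    """Total time for a request whose database calls run one after another."""
    return per_call_ms * calls


# Hypothetical budget and call count, chosen only for illustration.
BUDGET_MS = 200
CALLS = 25

for per_call in (0.5, 2.0, 8.0, 10.0):
    total = total_latency_ms(per_call, CALLS)
    status = "within" if total <= BUDGET_MS else "over"
    print(f"{per_call:>4} ms/call x {CALLS} calls = {total:6.1f} ms ({status} a {BUDGET_MS} ms budget)")
```

At 8 ms per call, these 25 calls alone consume the entire 200 ms budget; at sub-millisecond latency they use only about 6% of it.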

How to leverage a cache

First of all, caching typically requires keeping the most frequently used data in memory. Yes, that improves performance, but at what cost? It’s not just the cost of the memory itself but also the additional servers required, not to mention the staff needed to manage them.

Caching use cases

If a cache doesn't function like a jet booster that attaches to your database to achieve supersonic speeds, what role does it play? When is it considered essential to integrate a caching layer into your stack? Some of the more prevalent uses include:

Content caching

Basic content caching for media or thumbnails reduces requests to the underlying storage. Serving commonly accessed data from the cache improves performance without disrupting the existing data architecture.
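One common way to implement content caching is the cache-aside pattern: check the cache first, and only on a miss fetch from storage and populate the cache. The minimal sketch below uses an in-process LRU cache built on `collections.OrderedDict`; `LRUContentCache` and `fetch_thumbnail_from_storage` are hypothetical names, the latter standing in for your real storage call. A production system would more likely use a dedicated cache server.

```python
from collections import OrderedDict
from typing import Callable


class LRUContentCache:
    """Bounded in-memory cache that evicts the least recently used item."""

    def __init__(self, capacity: int, fetch: Callable[[str], bytes]):
        self.capacity = capacity
        self.fetch = fetch  # called only on a cache miss
        self._items: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self._items:
            self._items.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._items[key]
        self.misses += 1
        value = self.fetch(key)  # cache-aside: go to storage on a miss
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry
        return value


# Hypothetical storage call standing in for object storage or a database.
storage_reads = []


def fetch_thumbnail_from_storage(key: str) -> bytes:
    storage_reads.append(key)
    return f"thumbnail-bytes-for-{key}".encode()


cache = LRUContentCache(capacity=2, fetch=fetch_thumbnail_from_storage)
for key in ["sofa.jpg", "lamp.jpg", "sofa.jpg", "desk.jpg", "lamp.jpg"]:
    cache.get(key)

print(cache.hits, cache.misses, storage_reads)
```

The final lookup for `lamp.jpg` goes back to storage because that item was evicted to stay within capacity, which is the memory trade-off described earlier.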

User session store

A cache can hold user session data, such as profiles and browsing history, which can then power a shopping cart, personalization features such as a leaderboard, and a real-time recommendation engine.
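A session store is essentially a key-value map with expiry. The sketch below is a hypothetical single-process version with a sliding time-to-live: every read extends the session. The `SessionStore` class, the 30-minute TTL, and the injectable clock are assumptions for illustration; real deployments usually keep sessions in a shared in-memory store so every application server sees the same data.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class SessionStore:
    """Hypothetical in-memory session store; each access extends the session's lifetime."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._sessions: Dict[str, Tuple[float, Dict[str, Any]]] = {}

    def put(self, session_id: str, data: Dict[str, Any]) -> None:
        self._sessions[session_id] = (self.clock() + self.ttl, data)

    def get(self, session_id: str) -> Optional[Dict[str, Any]]:
        entry = self._sessions.get(session_id)
        if entry is None:
            return None
        expires_at, data = entry
        if self.clock() >= expires_at:
            del self._sessions[session_id]  # lazily drop expired sessions
            return None
        self._sessions[session_id] = (self.clock() + self.ttl, data)  # sliding expiry
        return data


# Fake clock so the example is deterministic.
now = [0.0]
store = SessionStore(ttl_seconds=1800, clock=lambda: now[0])
store.put("sess-42", {"cart": ["sofa"], "recently_viewed": ["lamp", "desk"]})

now[0] = 1000  # user is active again after about 17 minutes
print(store.get("sess-42"))
now[0] = 1000 + 1801  # idle for just over 30 minutes
print(store.get("sess-42"))
```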

Speed up access to backend data stores

Legacy mainframes, data warehouses, and relational systems were not designed to operate efficiently at cloud scale. As usage grows, the sheer volume of requests can overwhelm them. Yet they may contain datasets that are useful for modern operational use cases. Extracting that data into a cache or an in-memory database puts it to good use without stressing the backend system.
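As a rough illustration of shielding a slow backend, the sketch below wraps a hypothetical `query_legacy_system` function with Python’s standard `functools.lru_cache` and uses `cache_info()` to count how many calls actually reached the backend. The simulated backend and the request pattern are assumptions; hits divided by total lookups gives the cache hit rate.

```python
from functools import lru_cache

backend_calls = 0


@lru_cache(maxsize=1024)
def query_legacy_system(account_id: int) -> str:
    """Hypothetical expensive lookup against a mainframe or data warehouse."""
    global backend_calls
    backend_calls += 1  # only runs on a cache miss
    return f"profile-for-{account_id}"


# Hypothetical traffic: a handful of popular accounts requested repeatedly.
requests = [1, 2, 3, 1, 2, 1, 4, 1, 2, 3]
for account_id in requests:
    query_legacy_system(account_id)

info = query_legacy_system.cache_info()
hit_rate = info.hits / (info.hits + info.misses)
print(f"backend calls: {backend_calls}, hits: {info.hits}, misses: {info.misses}, hit rate: {hit_rate:.0%}")
```

Note that `lru_cache` keeps data inside a single process and never sees updates made to the backend, so a real deployment would need expiry or invalidation on top of this.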

Caching response times visualized

Caching only helps if the data the application needs happens to be sitting in the cache. Database operations that don’t hit the cache have much longer latencies, which will be the limiting factor in how quickly a page loads or a bank account is updated.

For instance, consider an e-commerce store and all of the sub-operations that have to fire off for a single product page to load:

Retrieving comprehensive product descriptions

Loading product images and videos

Collecting customer feedback

Creating recommendations for related items

Creating a list of suggested commonly paired items

Viewing user account information

Summarizing the items in the shopping cart

Displaying recently viewed items

Showcasing current discount offers

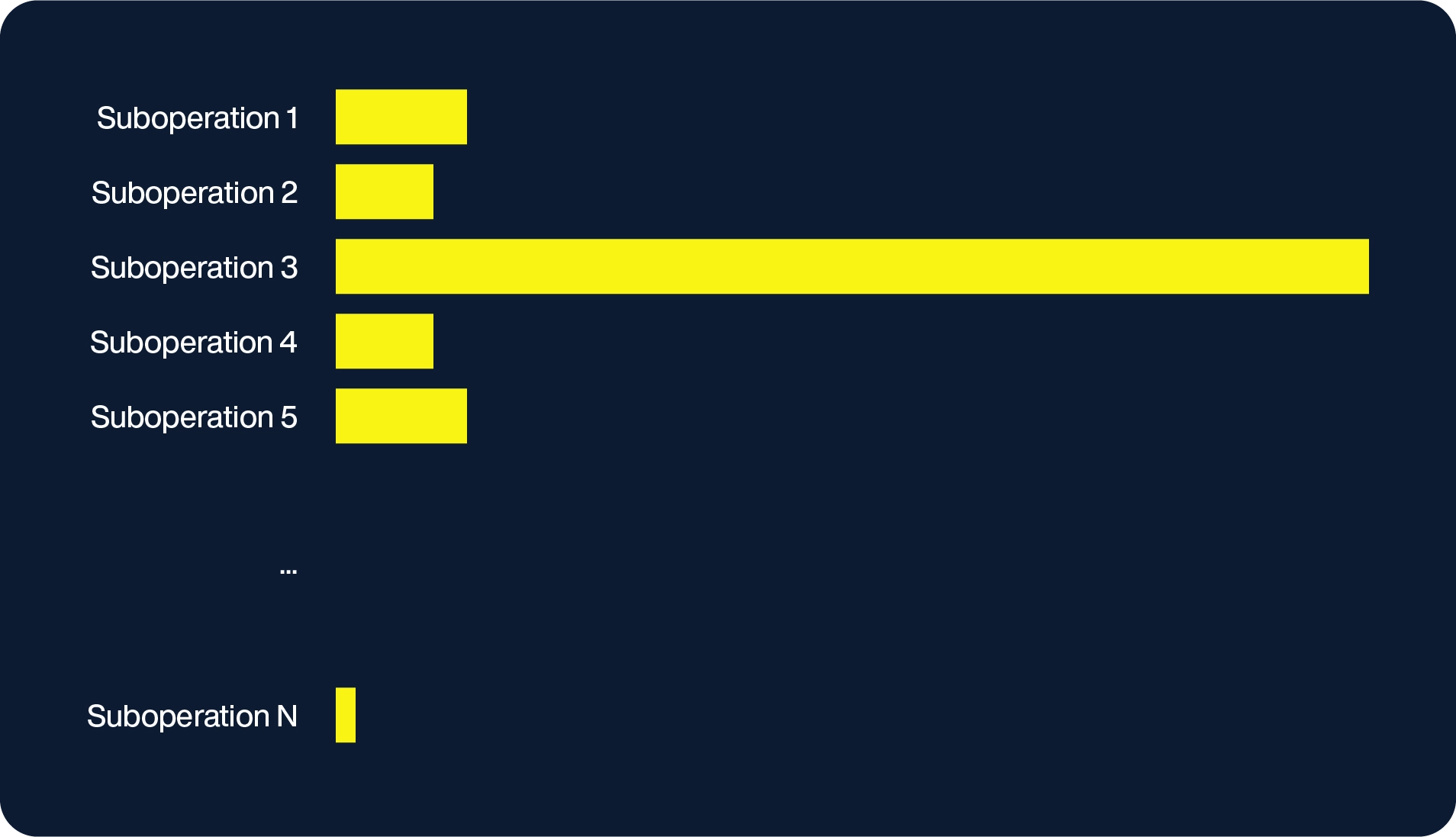

Many of these tasks require database queries. If one were to chart the time the database takes to deliver the necessary data for each sub-operation, the pattern would look something like this:

The page can’t finish loading until its longest sub-operation completes, which, in this case, is sub-operation 5. To improve performance, the common practice is to place a cache in front of the database. This method changes the response times to:

What’s happening here? Certain operations can use the cache for fast data retrieval, dramatically lowering their durations, while others still need direct database access and keep their higher latency. Since overall page load speed is dictated by the slowest task, adding a cache has only a marginal impact on total page load time.
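The effect can be sketched numerically. The latencies below are hypothetical values, not measurements, for nine sub-operations like those listed above (the dictionary keys are illustrative names). The sketch assumes the sub-operations run in parallel, so the page is ready only when the slowest one finishes, and that a cache hit takes 1 ms.

```python
# Hypothetical per-sub-operation database latencies in milliseconds (not measured data).
db_latency_ms = {
    "product_description": 40,
    "images_and_video": 55,
    "customer_reviews": 60,
    "related_items": 70,
    "paired_items": 120,  # sub-operation 5: the slowest in this example
    "account_info": 35,
    "cart_summary": 30,
    "recently_viewed": 45,
    "discount_offers": 50,
}

CACHE_HIT_MS = 1  # assumed latency when data is served from the cache


def page_load_ms(latencies: dict) -> int:
    """With sub-operations running in parallel, the page waits for the slowest one."""
    return max(latencies.values())


# Assume every sub-operation except the slowest one is served from the cache.
cached_keys = set(db_latency_ms) - {"paired_items"}
with_cache = {
    name: (CACHE_HIT_MS if name in cached_keys else ms)
    for name, ms in db_latency_ms.items()
}

print("without cache:", page_load_ms(db_latency_ms), "ms")
print("with cache:   ", page_load_ms(with_cache), "ms")
```

Even with eight of the nine sub-operations answered from the cache, the single uncached call still sets the page load time.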

Cache hit rate

The cache hit rate is the proportion of data requests served from the cache rather than from the backend server.

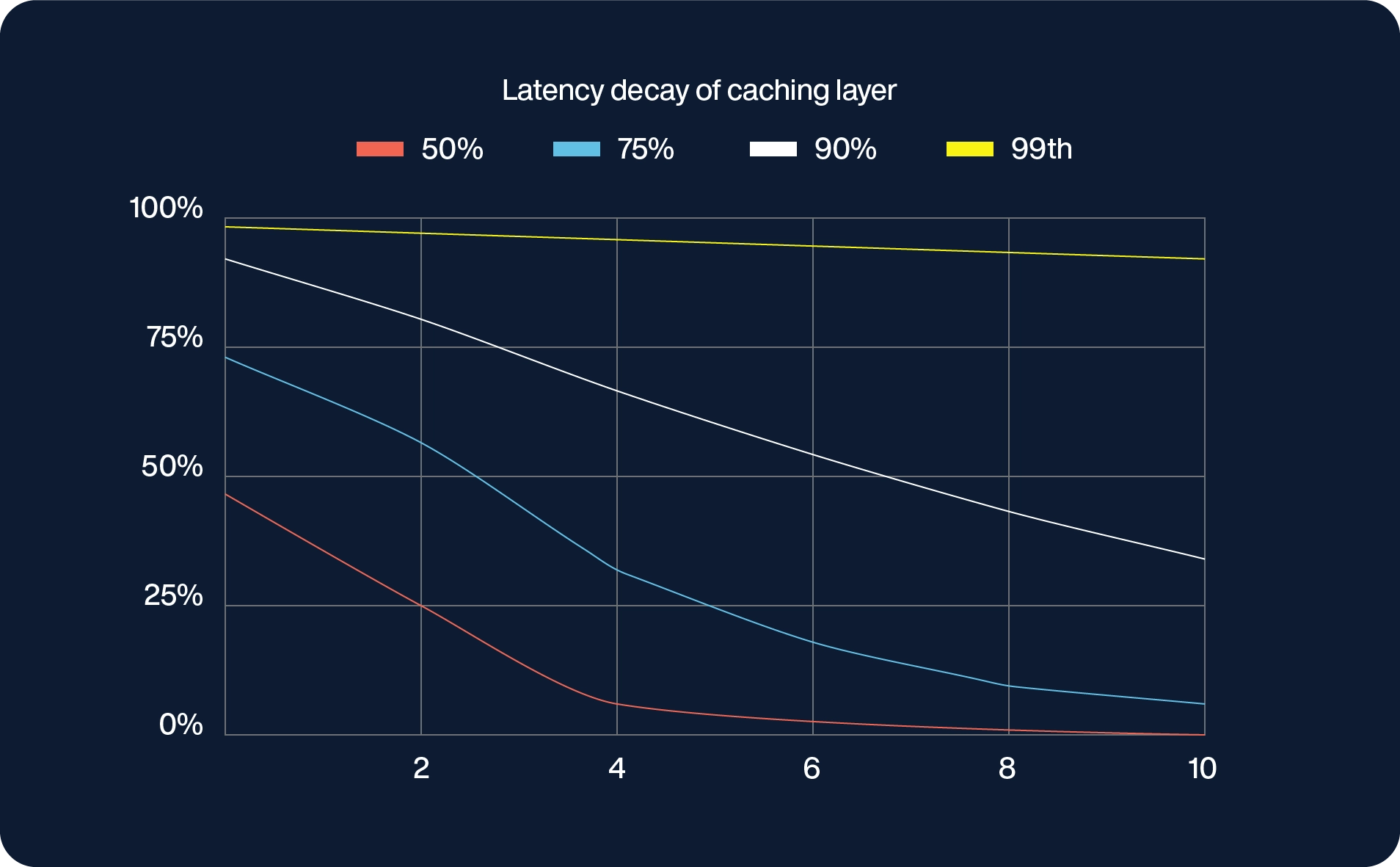

Extending cache hit rates across sub-operations

Consider this: if you have a 50% cache hit rate, which is actually pretty good, and a task requires two operations, the probability that both are served from the cache is 50% × 50%, or 25% (assuming each lookup hits or misses independently). If you have four operations on the page, the probability drops to about 6%. In other words, the advantages of putting a cache in front of the database, or adding a lot of memory in front of your disk, are minimal at best.

Even if you improve your cache hit rate to 75%, all four operations will be served from the cache less than a third of the time.

It’s crucial to emphasize that even with an impressive 99% cache hit rate, achieved by maintaining a large cache, the likelihood of a page load with five sub-operations being served entirely from the cache is only about 95% (99% to the power of 5). While a 95% rate is commendable, most enterprises aim for optimal performance on 99% of user requests, so this caching approach leaves a gap between the desired and actual outcomes.
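The arithmetic in this section is the probability that every one of n lookups hits the cache, which is the hit rate raised to the power n. A few lines of Python reproduce the figures above under the same simplifying assumption that each lookup hits or misses independently. The 20-lookup case is an illustrative addition for pages with more sub-operations.

```python
def all_hits_probability(hit_rate: float, lookups: int) -> float:
    """Probability that every one of `lookups` independent lookups is a cache hit."""
    return hit_rate ** lookups


for hit_rate, lookups in [(0.50, 2), (0.50, 4), (0.75, 4), (0.99, 5), (0.99, 20)]:
    p = all_hits_probability(hit_rate, lookups)
    print(f"hit rate {hit_rate:.0%}, {lookups:>2} lookups -> {p:.1%} of page loads fully cached")
```

With 20 lookups, even a 99% hit rate fully serves only about 82% of page loads from the cache.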

And how often do you have a page that requires only five operations? That’s why sub-millisecond latency is important, even for applications where you don’t think you need it.

Some applications need sub-millisecond latency

Some high-intensity applications, such as fraud prevention, artificial intelligence, and customer 360, just won’t work at scale without real-time response.

Use case: Financial services

Look at PayPal. The financial services company processes 41 million payments per day, for a total of $323 billion in yearly turnover. It has just 500 ms, the speed of human thought, to decide whether to accept or decline a transaction, and within that window it might have up to 40 references to check. With that many lookups per decision, even a 90% cache hit rate isn’t going to make much of a difference.
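Applying the same hit-rate arithmetic to these figures shows why. The sketch assumes, for simplicity, that all 40 lookups are needed and that each one hits the cache independently with 90% probability. The per-lookup budget assumes the lookups run one after another, which is an illustrative assumption rather than a description of PayPal’s actual system.

```python
DECISION_BUDGET_MS = 500  # time allowed per transaction decision (from the text)
LOOKUPS = 40              # references to check per decision (upper bound from the text)
HIT_RATE = 0.90           # cache hit rate from the text

# Probability that every lookup is a cache hit, assuming independent lookups.
p_all_cached = HIT_RATE ** LOOKUPS
expected_misses = LOOKUPS * (1 - HIT_RATE)
# Hypothetical: time available per lookup if they had to run sequentially.
sequential_budget_per_lookup = DECISION_BUDGET_MS / LOOKUPS

print(f"all {LOOKUPS} lookups served from cache: {p_all_cached:.1%} of decisions")
print(f"expected cache misses per decision: {expected_misses:.0f}")
print(f"budget per lookup if run sequentially: {sequential_budget_per_lookup} ms")
```

Under these assumptions, roughly 98.5% of decisions would still wait on at least one database read, so the database’s own latency, not the cache, sets the pace.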

When the 500 ms was up, the fraud detection software had to make a decision. If it couldn’t determine whether a transaction was fraudulent, it accepted the risk and let the transaction go through. Typically, that amounted to about 1.5% of all transactions, creating $4.7 billion of risk yearly.

Fortunately, most of those transactions were legitimate. However, switching to a database with sub-millisecond latency reduced that share from 1.5% to 0.05%, a 30x reduction in exposed risk.

The result is that PayPal’s fraud rate is 10x lower than the industry average.

Use case: e-commerce

Alternatively, consider one online furniture seller with $14 billion in annual revenue, 31 million active customers, 14 million products, and 61 million orders annually.

Like many e-commerce companies, this retailer lives and dies by its recommendations and ad placements, which it calculates from data such as purchases, viewed categories, and “favorited” items. With all that data and sub-millisecond latency, the company displays ads for relevant products. It also automates actions based on user behavior, such as sending app notifications to users about sales on items they’ve shown interest in.

Better recommendations led to a 6% increase in the size of customers’ carts and a 30% reduction in abandoned carts.

To cache or not to cache? Yes/Maybe/No

It is important to understand that in complex transactional or operational use cases, caches may not work the way you think they will or should. As explained above, loading up a caching server with tons of memory will not automatically yield uniformly low-latency responses.

In controlled environments where you can ensure a very high cache hit rate (say 99% or above), a simple caching layer may yield the results you want. However, hard experience has shown that in dynamic environments where complex queries are common, extracting the data into a real-time or in-memory database layer may yield better, more predictable results.

Sub-millisecond latency: Mission-critical for your organization

If your application depends on consistent, predictably low latencies, a single errant query or database operation can drag the performance of your entire database application down, and a caching solution simply may not be able to pick up that kind of slack.

A cache has its particular functions and solves important issues for many use cases, but it’s no guarantee of sub-millisecond database performance. That really depends on the database itself. For acceptable application performance in high-intensity use cases such as fraud detection, AI, and customer 360, sub-millisecond latency isn’t just nice to have—it’s essential.